Bill Joy, 15 years on

by Rob - March 16th, 2015. Filed under: Nonfiction, op-ed.

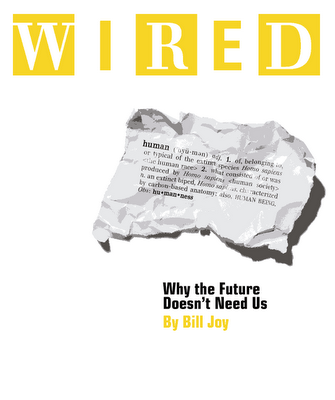

Fifteen years ago this week, Bill Joy’s famous antitechnology manifesto “Why the Future Doesn’t Need Us” appeared in Wired magazine. The Globe and Mail asked me for an op-ed in response, which appeared fifteen years ago today, on March 16, 2000. Here it is.


March 22nd, 2015 at 9:54 pm

It’s interesting that you agreed with Joy about the dangers of AI, then went on to write WWW.

I think you’re wrong (though, admittedly, the costs of ME being wrong might be too high to risk). You referenced Neuromancer’s Turing organization, which tries to put the brakes on AI, but you missed the far more important point that Neuromancer made: an AI’s own compulsions and needs may (and probably will) be so different from ours as to make us irrelevant to it. Why would it need to fight us? By the time it comes about, the AI will already control most if not all of our resource extraction and manufacturing, so we can’t threaten it. We put out traps when the mice get too annoying, but for the most part we let mice live. AIs will swat us if we bother them, but for the most part will let us live our lives.

Neither can I agree with Hans Moravec. I don’t think “humanity’s job is to manufacture its own successors.” We might do that. We might manufacture another life form whose path is entirely orthogonal. Or, of course, we might not ever create an AI.

March 22nd, 2015 at 10:06 pm

Well, we agree to disagree. And I certainly didn’t miss anything; that’s an unfair rhetorical trick (“you referenced this part of a book but not that” does not equal “you missed the point”). Further, the Turing Police notion was new and clever in 1984, when Bill Gibson put it forward in NEUROMANCER; the notion that AI might have nothing in common with us was hardly new, even then.

In any event, I don’t necessarily agree with your gloss on Gibson’s fictional point about our irrelevance to AIs, a point for which there is, as yet, no empirical evidence. Sure, it’s possible that AIs will find us irrelevant, but it’s not an axiom that they must. I still think conscious AI is a potentially existential danger to humanity (as do Stephen Hawking, Bill Gates, and Elon Musk), although it’s not necessarily one. Hence the WWW trilogy, mapping out the possibility (but by no means the certainty) of an alternative win-win scenario.